The 10-Year Anniversary of the HealthCare.gov Rescue

Ten years ago today, on Friday, October 18, 2013, the effort to fix HealthCare.gov began in earnest. At 7:15 A.M. Eastern time, a small group assembled next to the entrance to the West Wing of the White House. The group included Todd Park, Brian Holcomb, Gabriel Burt, Ryan Panchadsaram, Greg Gershman, and myself. Later in the day we were joined by Mikey Dickerson via a long-running speakerphone call. Some of us were from outside of government (Gabe, Brian, Mikey, and me), and the others had jobs in government at the time (Todd, Ryan, and Greg). What we all had in common was that we were experienced technologists, having been at startups or at large established technology organizations.

The members of our group were selected by Todd, working with Greg and Ryan and others behind the scenes to identify people who could help because they had that kind of technology experience. HealthCare.gov, having launched days earlier on October 1, 2013, wasn't working. From the vantage point of the country's top political leadership, it was clear outside help was needed. Todd, the CTO of the United States at the time, which is a position in the White House, was tapped to help fix it. His plan was to provide reinforcements to complement the team of government employees and contractors that had built HealthCare.gov and were in the midst of operating it. We were to be a small team, very discreet. Todd was our leader. It was already a high-pressure, stressful situation, so insertion into that context meant melding in, not blowing things up. It was to be a low-key mission of information gathering and assessment, not the cavalry storming in. Todd told us the next 30 days would be critical. The goal was to enroll 7 million people by March 31, 2014, the end of the period known as open enrollment. When the media was eventually informed of our existence by the White House, we were referred to as "the tech surge".

When Todd called me two days prior on October 16 to ask me if I would join the effort, he didn't have to explain the stakes. I understood what it meant for the website to work. I immediately agreed, and put on hold what I was doing, which happened to be raising money for a startup I had founded. I took Todd's call while walking the grounds of the Palace of Fine Arts in San Francisco, having met with VCs earlier in the day. I was living in Baltimore at the time with my wife and toddler daughter. I flew back home right away, and before I knew it I was taking the earliest morning train I could from Camden Yards to DC's Union Station. I thought I had timed it right, but still wound up running across Pennsylvania Avenue so as not to be late.

We couldn't have started any sooner even if we had wanted to. The federal government had shut down on October 1, the same day HealthCare.gov had launched. The shutdown prevented anyone from outside the main team working on HealthCare.gov from coming in to help. So while days passed with the news dominated by the twin stories of the shutdown and the slow-moving catastrophe of the launch, a vacuum of information formed, as well as a surplus of speculation and worry. The White House couldn't figure out what was wrong with the site, and the implications of it failing were troubling. The Affordable Care Act, the signature domestic policy achievement of President Obama's tenure, had gone into effect, and the website was to be the main vehicle for delivering the benefits of the law to millions of people. If they didn't know what was wrong with HealthCare.gov, other than that it was manifestly not working, plain for everyone to see, and therefore might not be able to fix it, what would that mean for the fate of health care reform? Fortunately, the shutdown ended on October 17, which meant we could get to work and finally understand what was going on.

Some of us already knew each other, but everyone was new to someone else. We introduced ourselves, headed inside, and after breakfast in the Navy Mess, headed upstairs to the Chief of Staff's office. Denis McDonough shook our hands as we somewhat awkwardly stood in a line. He asked us directly, "Can you fix it?" In our nervous energy, I remember some of us blurting out, "Yes." We had confidence, but we also were eager to dive in, learn as much as we could, and get going.

A van was procured, along with a driver. We piled in and headed across the Mall to the Hubert H. Humphrey Building, headquarters of the US Department of Health & Human Services (HHS). Entering the lobby, we passed Secretary Kathleen Sebelius. She wasn't there for us; she was welcoming federal employees back to work after the shutdown. Our meeting there was with Marilyn Tavenner and her staff. Tavenner was the Administrator of the Centers for Medicare & Medicaid Services (CMS), the largest organization in HHS, and the owner of HealthCare.gov.

Our meeting with Tavenner and her team yielded our first details of the system that was HealthCare.gov. We learned how it was structured and what its main functional components were. We heard first hand what CMS leadership knew about what was wrong, or at least where in the system they could see that things were not working. It was our first sense of the size and complexity of the site, both in terms of functionality and in terms of the number of contractors, sub-components, and business rules such as those for eligibility. But remember, these were not technical people - they were health policy experts and administrators. Most of the things they were reporting to us were business metrics about the site, descriptions of high-level performance. It was helpful to hear this perspective, and indeed many of these metrics would drive our later work. But this would not yet be the time to learn about the technical challenges the team was facing.

Our morning continued back in the van, leaving DC for CMS headquarters in Woodlawn, Maryland, just outside Baltimore, a 45-minute drive. Here we met with the leadership of HealthCare.gov itself, a group of people including Michelle Snyder, CMS's COO, Henry Chao, one of its main architects, and Dave Nelson, a director with a telecom background, who was being elevated and would oversee much of the rescue work from CMS's perspective. The sketchy picture of HealthCare.gov we had was coming into greater relief. We learned about deployment challenges and bottlenecks, more about where specifically users were getting "stuck" using the site, and we started to hear bits and pieces about the particular technologies being used, including something called MarkLogic, an XML database, which was new to us. We even started to get some details about the deployment architecture and the types of servers involved. Again, the theme of complexity stood out. But we were also still talking at a fairly high level. To really understand what was wrong with HealthCare.gov, we'd have to move on.

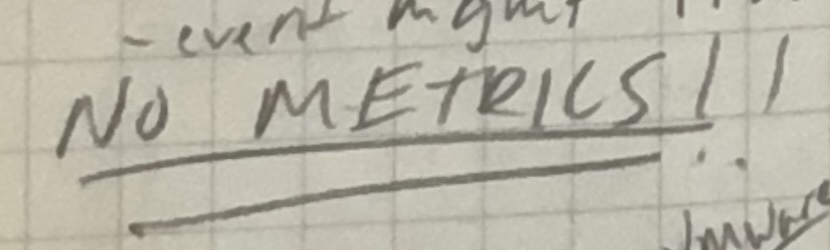

The afternoon was spent a few miles down the road back toward DC in Columbia, Maryland, at something called the XOC, or Exchange Operations Center. This was to be the mission-control-style hub of operations for HealthCare.gov. We found a room staffed with a few contractors and some CMS employees. (The XOC eventually would be the site of so much activity during the rescue that a scheme to keep people out was needed.) Here was where, away from the leadership-filled rooms, we finally heard from technologists who were directly working on the site. What we heard was troubling. There was a lack of confidence in making changes to the source code. A complex code generation process governed much of the site and produced huge volumes of code, requiring careful coordination between teams. Testing was largely a manual process. Releases and deployment required lengthy overnight downtimes and often failed, requiring equally lengthy rollbacks. The core data layer lacked a schema. Provisioning hosting resources was slow and not automated. Critically, there was a pervasive lack of monitoring of the site. No APM, no bog-standard metrics about CPU, memory, disk, network, etc., that could be viewed by anyone on the team. By this point, the looks we exchanged amongst ourselves betrayed our fears that the situation was much worse than we initially expected. I carried with me that day a Moleskine notebook that I furiously scribbled in so as not to forget what I was hearing. You can see my panic start to rise in my writing as the day wore on.

With that dose of reality and the evening setting in, we collected ourselves back in the van and set off for our final field trip of the day, to suburban Virginia for the offices of CGI, the prime contractor of HealthCare.gov. In Herndon, we were greeted by many people on their leadership team and the technical teams who had built the site, even though it was getting on past business hours at this point. This was as much an interview of us by them as it was a chance for us to ask questions. We had a brief opportunity to ingratiate ourselves and win their trust. We did that in part by showing our eagerness to dig in on some of the things we had learned earlier in the day, proving our engineering bona fides (specifically with high-traffic websites), and emphasizing that we were not there for any other reason than to help. This was a team on edge, under the gun and exhausted. We needed them to succeed, and they weren't going to work with us if they thought we were there to cast blame.

We asked many questions, but they mostly boiled down to: what's wrong with the site, and how do you know what's wrong? Show us where in the system this or that component isn't performing the way you expected. And they and CMS mostly couldn't do that. They had daily reporting and analytics that produced those high-level business metrics. But again there was that lack of monitoring of the system itself, in real time and under load. So we focused on that.

It was getting late. Could we throw a Hail Mary? Walk out of that building leaving behind something of tangible value, something promising they could build on? We said, there are lots of APM-style monitoring services, but we're familiar with New Relic. Could we install it on a portion of the servers? Yes, we know there's a complicated and fragile release process, but if we bypassed that and just directly connected to some of the machines, we could install the agent on them and be receiving metrics almost immediately. Glances were exchanged. A CMS leader in the room made a call on their cell phone - we actually have some New Relic licenses already, we can use those. There was also some hesitancy about whether this kind of extraordinary, out-of-the-norm request would be approved - clearly, even during this period of turmoil, all the stakeholders stuck to the regular release script. The CMS leader nodded their approval. A small group assembled to marshal the change through. Many folks had stuck around, even though it was nearing midnight. Then on a flatscreen in the conference room, we pulled up the New Relic admin, and within moments, the "red shard" (the subset of servers we had chosen for this test) was reporting in. And there it was - we could see clearly the spike in request latency, even at this late hour, that indicated a struggling website, along with error rate, requests per minute, and other critical details that had basically been invisible to the team to that point. Imagine a hospital ICU ward without an EKG monitor. Now that they knew exactly in what ways the site was struggling, they could start to correlate those problems with the business metrics and other aspects of the site that needed improving. They could then prioritize the fixes and actions that would yield the biggest improvements.

That would come later. For now, exhausted, well past midnight, we left Herndon and rode the van back to DC. At roughly 2 A.M. we reconvened in the Roosevelt Room in a completely silent White House for a quick debrief. As we reflected on what we had just experienced, another thought was settling in over the group - that this was obviously not over by any stretch, that none of us were going home any time soon, that the challenge was much larger in scope than we had imagined, that any notion we may have had at the outset of possibly just offering some suggested fixes and moving on was in retrospect hilariously naive, that this was all we were going to be doing for the foreseeable future until the site was turned around. Indeed, we all managed to find a few hours of sleep in a nearby hotel and then were right back at it in the morning, heading straight out to Herndon.

This was just day one, a roughly 18-hour day, and it certainly wasn't the last such marathon. Over the next two-and-a-half months until the end of December, the tech surge expanded and took on new team members, experienced many remarkable events and surprises, and ultimately, successfully helped turn HealthCare.gov around. Millions enrolled in health care coverage that year, many for the first time. I hope to tell more stories of how that happened over the next few weeks and months.